MEMORY DIM3 CODE

MEMORY DIM3 HOW TO

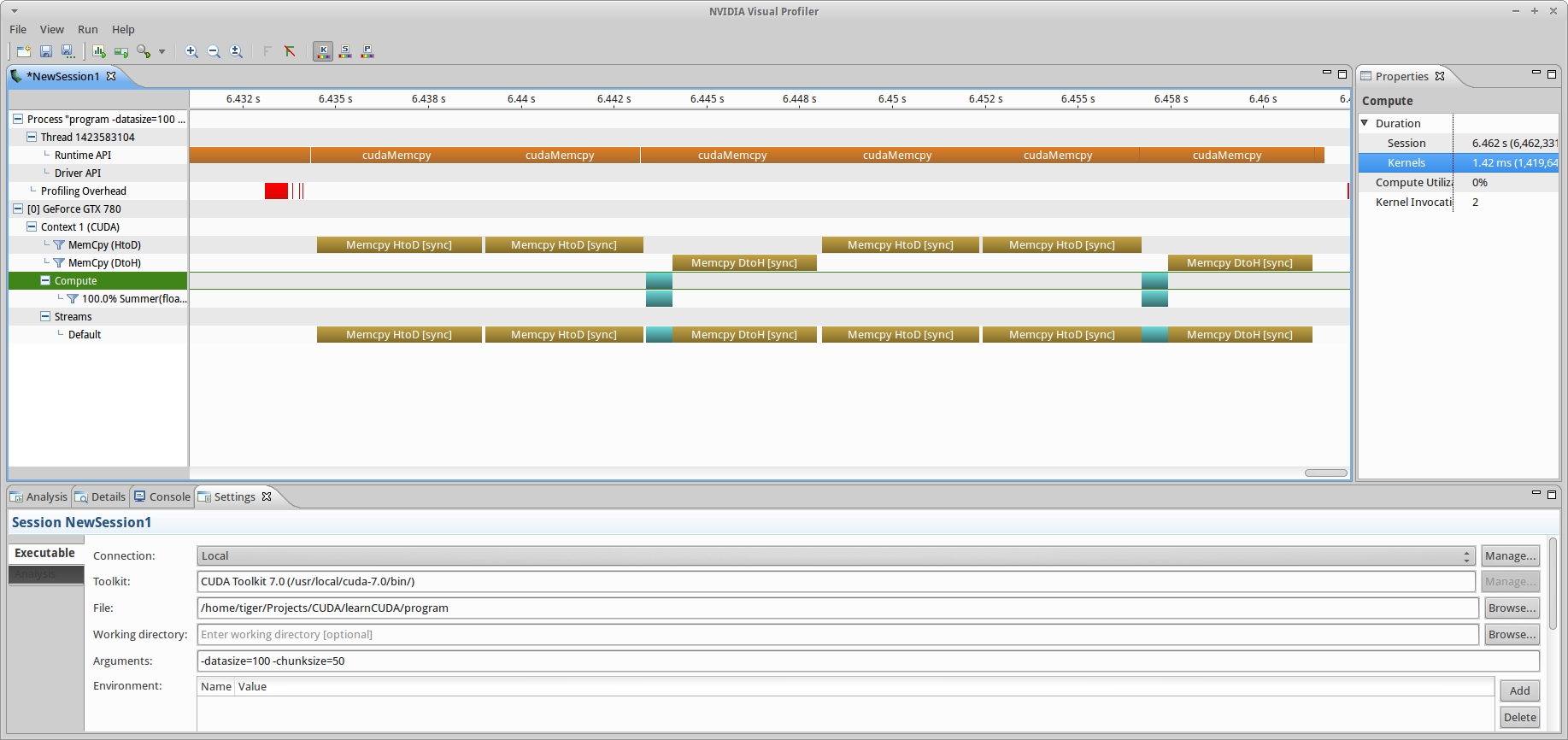

Fragments of the code from the question:

    int bx = blockIdx.x;                  // tile (block) indices
    int Row = by * TILE_WIDTH + ty;       // global indices
    cudaMemcpy(C, Cd, size, cudaMemcpyDeviceToHost);
    cudaEventRecord(stop, 0);             // measure end time
    cudaEventElapsedTime(&elapsed_time_ms, start, stop);
    printf("Time to calculate results on GPU: %f ms.\n", elapsed_time_ms);
    printf("\nEnter c to repeat, return to terminate\n");

Guys, the program that I'm working on is for matrix multiplication using non-shared and shared memory, and it shows the same calculation time. If possible, could you have a look at the code below? Thanks.

    __global__ void gpu_matrixmult(int *Md, int *Nd, int *Pd, int Width)

Below is a graph of the execution time on my CPU against the execution time on my graphics card. The CPU is a 2.66 GHz Core 2 Duo, while the graphics card is a GTX 280. As the graph shows, the GPU is faster when there are at least a million elements, and the spread between the GPU and CPU continues to widen with more elements. Main system memory may be a significant bottleneck that is preventing the GPU from achieving more than 1.5x the processor performance.
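For the shared versus non-shared question, a minimal sketch may help to compare the two approaches under identical conditions. It is not the poster's program: the kernel names `matmul_global` and `matmul_shared`, the matrix size, and the test data are assumptions made for illustration. Only the kernel execution is placed between the CUDA events, because if the timed region also covers `cudaMemcpy`, transfer time can mask any difference between the kernels. Error checking is omitted for brevity.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

#define TILE_WIDTH 16

// Non-shared version: every operand is read directly from global memory.
__global__ void matmul_global(const int *Md, const int *Nd, int *Pd, int Width) {
    int Row = blockIdx.y * blockDim.y + threadIdx.y;
    int Col = blockIdx.x * blockDim.x + threadIdx.x;
    if (Row >= Width || Col >= Width) return;
    int sum = 0;
    for (int k = 0; k < Width; ++k)
        sum += Md[Row * Width + k] * Nd[k * Width + Col];
    Pd[Row * Width + Col] = sum;
}

// Shared version: each block stages one tile of Md and Nd per iteration.
__global__ void matmul_shared(const int *Md, const int *Nd, int *Pd, int Width) {
    __shared__ int Ms[TILE_WIDTH][TILE_WIDTH];
    __shared__ int Ns[TILE_WIDTH][TILE_WIDTH];
    int bx = blockIdx.x, by = blockIdx.y;    // tile (block) indices
    int tx = threadIdx.x, ty = threadIdx.y;  // thread indices inside the tile
    int Row = by * TILE_WIDTH + ty;          // global indices
    int Col = bx * TILE_WIDTH + tx;
    int sum = 0;
    int tiles = (Width + TILE_WIDTH - 1) / TILE_WIDTH;
    for (int m = 0; m < tiles; ++m) {
        int mc = m * TILE_WIDTH + tx;        // column of Md to load
        int nr = m * TILE_WIDTH + ty;        // row of Nd to load
        Ms[ty][tx] = (Row < Width && mc < Width) ? Md[Row * Width + mc] : 0;
        Ns[ty][tx] = (nr < Width && Col < Width) ? Nd[nr * Width + Col] : 0;
        __syncthreads();                     // tile fully loaded before use
        for (int k = 0; k < TILE_WIDTH; ++k)
            sum += Ms[ty][k] * Ns[k][tx];
        __syncthreads();                     // tile fully used before overwrite
    }
    if (Row < Width && Col < Width) Pd[Row * Width + Col] = sum;
}

int main() {
    const int Width = 512;                   // assumed size for the sketch
    const int count = Width * Width;
    const size_t size = count * sizeof(int);
    int *A = (int *)malloc(size), *B = (int *)malloc(size);
    int *C1 = (int *)malloc(size), *C2 = (int *)malloc(size);
    for (int i = 0; i < count; ++i) { A[i] = i % 7; B[i] = i % 5; }

    int *Md, *Nd, *Pd;
    cudaMalloc(&Md, size); cudaMalloc(&Nd, size); cudaMalloc(&Pd, size);
    cudaMemcpy(Md, A, size, cudaMemcpyHostToDevice);
    cudaMemcpy(Nd, B, size, cudaMemcpyHostToDevice);

    dim3 block(TILE_WIDTH, TILE_WIDTH);
    dim3 grid((Width + TILE_WIDTH - 1) / TILE_WIDTH,
              (Width + TILE_WIDTH - 1) / TILE_WIDTH);
    cudaEvent_t start, stop;
    cudaEventCreate(&start); cudaEventCreate(&stop);
    float ms_global = 0.0f, ms_shared = 0.0f;

    cudaEventRecord(start, 0);               // time the kernel only
    matmul_global<<<grid, block>>>(Md, Nd, Pd, Width);
    cudaEventRecord(stop, 0);
    cudaEventSynchronize(stop);              // wait until the kernel is done
    cudaEventElapsedTime(&ms_global, start, stop);
    cudaMemcpy(C1, Pd, size, cudaMemcpyDeviceToHost);

    cudaEventRecord(start, 0);
    matmul_shared<<<grid, block>>>(Md, Nd, Pd, Width);
    cudaEventRecord(stop, 0);
    cudaEventSynchronize(stop);
    cudaEventElapsedTime(&ms_shared, start, stop);
    cudaMemcpy(C2, Pd, size, cudaMemcpyDeviceToHost);

    int mismatches = 0;                      // both kernels must agree
    for (int i = 0; i < count; ++i) mismatches += (C1[i] != C2[i]);
    printf("global: %f ms, shared: %f ms, mismatches: %d\n",
           ms_global, ms_shared, mismatches);

    cudaEventDestroy(start); cudaEventDestroy(stop);
    cudaFree(Md); cudaFree(Nd); cudaFree(Pd);
    free(A); free(B); free(C1); free(C2);
    return mismatches != 0;
}
```

With small matrices the two timings can be close on any hardware, so it is worth repeating the measurement with a larger `Width` before concluding that shared memory makes no difference.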

In summing up this article: it is possible, and many times necessary, for threads within the same block to communicate with each other through either shared memory or global memory. Shared memory is by far the fastest way; however, due to its size limitations, some problems will be forced to use global memory for thread communication. Thread synchronization is necessary to ensure that all data from all threads is valid before threads read from shared memory that is written to by other threads.
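The synchronization requirement can be illustrated with a minimal sketch in which each thread reads a shared-memory element written by a different thread of the same block. The kernel name `reverse_in_block` and the block size are assumptions chosen for the example, not taken from the article. Without the `__syncthreads()` barrier, a thread could read a slot that its partner thread has not yet written.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

#define BLOCK_SIZE 256

// Reverses each BLOCK_SIZE-element segment of data in place.
__global__ void reverse_in_block(int *data) {
    __shared__ int buf[BLOCK_SIZE];
    int t = threadIdx.x;
    int base = blockIdx.x * BLOCK_SIZE;
    buf[t] = data[base + t];                  // each thread writes its own slot
    __syncthreads();                          // all writes visible before any read
    data[base + t] = buf[BLOCK_SIZE - 1 - t]; // read a slot written by another thread
}

int main() {
    const int blocks = 4;
    const int n = blocks * BLOCK_SIZE;
    int h[n];
    for (int i = 0; i < n; ++i) h[i] = i;

    int *d;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, h, n * sizeof(int), cudaMemcpyHostToDevice);
    reverse_in_block<<<blocks, BLOCK_SIZE>>>(d);
    cudaMemcpy(h, d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);

    // Element i should now hold the value mirrored within its own segment.
    int errors = 0;
    for (int i = 0; i < n; ++i) {
        int base = (i / BLOCK_SIZE) * BLOCK_SIZE;
        int expected = base + (BLOCK_SIZE - 1 - (i - base));
        errors += (h[i] != expected);
    }
    printf("errors: %d\n", errors);
    return errors != 0;
}
```

The barrier only synchronizes threads within one block, which is why the sketch keeps each exchange inside a single block's segment.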
